Great animatronics without any programming knowledge

- Manual control and 24/7 automatic animatronic movements

- Easy to use, no programming knowledge required

- Open source, free to use

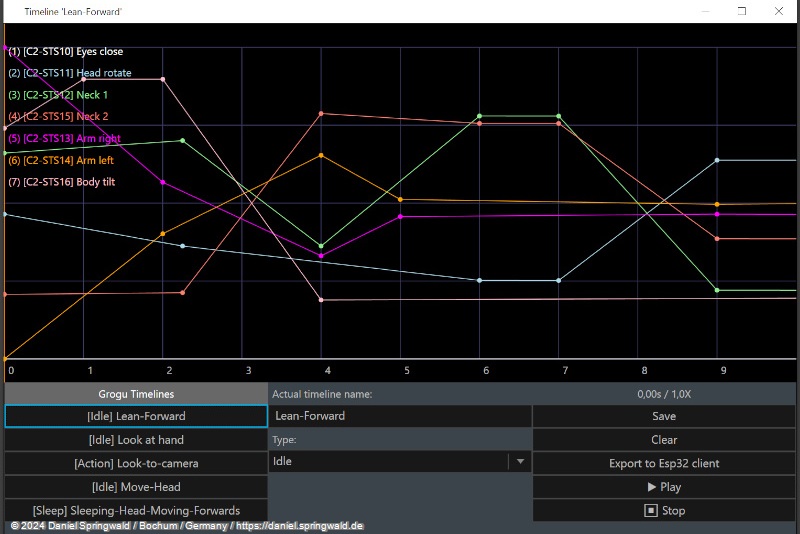

- Create motions with a graphical editor

- Supports low-cost ESP32 microcontrollers, many different servo motors, and other hardware

- Sound playback synchronized with the movements

What is Animatronic WorkBench?

In my previous animatronics projects, I always had a lot of fun building the hardware but put far less energy into programming the movements. That is a pity, because it is precisely the movements that bring the characters to life. One reason was that it really was programming: every movement had to be written in program code. This is tedious, the result often looks choppy, and the code has to be recompiled and restarted again and again to try out each change.

For this reason, I developed the software "Animatronic WorkBench" (AWB), which can be downloaded from **GitHub as open source**. It lets you create the movements of animatronic characters on screen without programming knowledge.

Getting Started + Documentation

In the **Animatronic WorkBench documentation** you will find instructions on how to install the software and create a first project.

There you can also find the list of **supported hardware** and the FAQ.

There is also a **guide to creating timelines** and to exporting the project for ESP32 microcontrollers.

There is also a short YouTube interview about the project from MakerFaire 2023:

Daniel
